
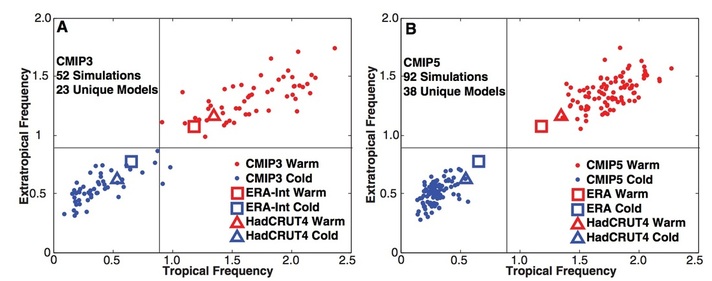

The most interesting short paper I have read in the past few years is the pre-press article by mathematician Kyle Swanson: "Emerging selection bias in large-scale climate change simulations" (behind the Wiley paywall). In it, Swanson shows that the ensemble of models most widely used by scientists exhibits a selection bias towards accurately capturing some phenomena (say, the Arctic sea ice extent). In turn, for other important phenomena, the model spread has decreased through the multi-year model refinement process while the ensemble mean has shifted further away from reality. In other words, there is a selection pressure on climate models which seems to be guiding them to converge upon the same result. In the words of evolutionary biology, researchers' belief that they are "getting something right" is what is paying for these evolutionary changes. Yet according to Swanson (and a privately held belief of many other researchers), this satisfaction is misplaced.

In the paper, Swanson retells Feynman's story of what happened after Robert Millikan miscalculated the charge on the electron: "When they got a number that was too high above Millikan's, they thought something must be wrong–and they would look for and find a reason why something might be wrong. When they got a number close to Millikan's value they didn't look so hard. And so they eliminated the numbers that were too far off, and did other things like that."

(Just to establish a baseline for this criticism: this selection bias is related to model-predicted or model-diagnosed patterns, and cannot be used to criticize climate change as a scientific fact. That the world is warming has been observed, evidenced analytically, and demonstrated in models over a wide range of complexities. Enough about that.)

The picture is a figure from his paper, showing how the model spread of the frequency of anomalously warm and cold months changes between the CMIP3 (~2007) and CMIP5 (~2010) intercomparison projects. This is plotted against "observations" from the reanalysis products HadCRUT and ER. The trend is obvious: the models tend to cluster together, but their mean does not shift towards the actual data.

For a long time, the rub on climate models was that they had very low precision: model spreads were large and uncertainties were high. The unmentioned benefit of this was in accuracy: real data often fell within the error bars of the prediction. Now we have increased model precision at the expense of accuracy, and in the hierarchy of model outcomes, accuracy should be placed above precision. Better to be reasonably sure than to be confidently wrong.

This is troubling. The implication is that modellers are providing a selection pressure for results which is (in a second sense) "unnatural", and that climate models are becoming increasingly covariant (perhaps in response to "improvements" in physical parameterizations that are added to the entire ensemble) without becoming more skillful. Not unlike the cartoon rabbit who puts his finger in the dike, only to see a new leak spring forth somewhere else, the rush to improve climate models in certain areas has resulted in an even larger problem.
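To make the precision-versus-accuracy point concrete, here is a minimal sketch in Python. The numbers are invented for illustration and are not taken from Swanson's paper. The function name `ensemble_summary` and the two-standard-deviation "envelope" test are my own assumptions, not his method. The sketch compares a wide ensemble that brackets the observation with a tight ensemble that misses it.

```python
from statistics import mean, stdev


def ensemble_summary(model_values, observed):
    """Return (bias, spread, covered) for one ensemble of model results.

    bias    : ensemble mean minus the observed value (accuracy)
    spread  : sample standard deviation across models (precision)
    covered : True if the observation lies within 2 spreads of the mean
              (an assumed, illustrative definition of "within error bars")
    """
    ens_mean = mean(model_values)
    spread = stdev(model_values)
    bias = ens_mean - observed
    covered = abs(bias) <= 2 * spread
    return bias, spread, covered


# Hypothetical values only: some diagnostic in arbitrary units.
observed = 10.0
older_generation = [3.0, 6.0, 8.0, 11.0, 14.0, 12.0]   # wide spread
newer_generation = [5.5, 6.0, 6.5, 6.0, 5.75, 6.25]    # tight spread

for name, values in [("older", older_generation), ("newer", newer_generation)]:
    bias, spread, covered = ensemble_summary(values, observed)
    print(f"{name}: bias={bias:+.2f}, spread={spread:.2f}, "
          f"observation inside envelope: {covered}")
```

In this toy case the newer ensemble is far more precise, with a much smaller spread, but its mean sits further from the observation and the observation falls outside its envelope. That is the "confidently wrong" situation described above.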


Author: Oceanographer, Mathemagician, and Interested Party
